Environment Formulation (MDP)

The environment is modeling by MDP (Markov Decision Process). MDP is just one way to model the environment, which means that it’s not a nessesary part for RL. There are also multiple mathmetical ways to model.

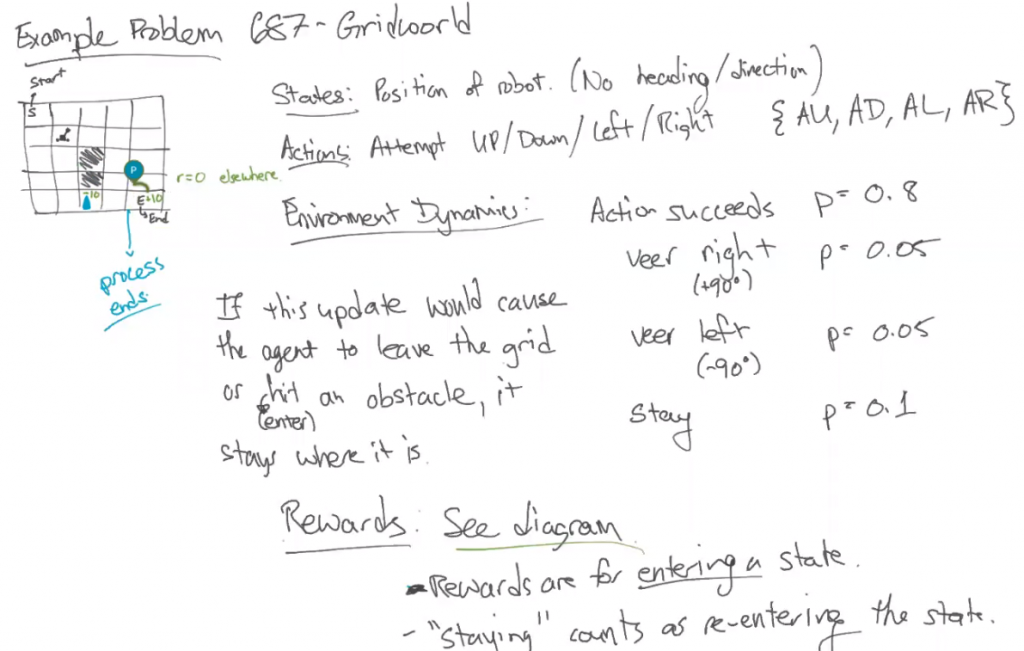

$ \mathcal{S} = $ Set of possible states of the environment. (Finite for now. It is a “state set”.)

$ \mathcal{A} = $ Set of possible actions. (Finite for now, “state set”.)

$ p =$ “Transition function”, which descripes how the state of the environment changes. Input: $\mathcal{S}, \mathcal{A}, \mathcal{S’}$ (next state). Output: Set of Possibilities [0,1]

$P(\mathcal{S}, \mathcal{A}, \mathcal{S’}) \triangleq Pr(S_{t+1} \in \mathcal{S’}|S_t = s. A_t = a$, for $\forall \mathcal{S}, \mathcal{A}, \mathcal{S’}, t$

Deterministic transition function: $P(\mathcal{S}, \mathcal{A}, \mathcal{S’}) \in \{ 0,1\}$

$d_R = $ Conditional distribution of $R_t$ given $S_t, A_t, S_{t+1}$

$ R_t $~$ d_R (S_t, A_t, S_{t+1}) $

Reward function: $R(s,a) \triangleq \mathbb{E} \{R_t|S_t = s, A_t = a\}$ (Expected value for $\mathbb{E}$) — R is not random variable.

$d_0 = $ “initial state distribution” $d_0: \mathcal{S} \rightarrow [0,1]$$d_0{s} \triangleq Pr(S_0 \subset S)$

$\gamma \in [0,1]$ is the “reward discount parameter”

Agent Formulation (MDP)

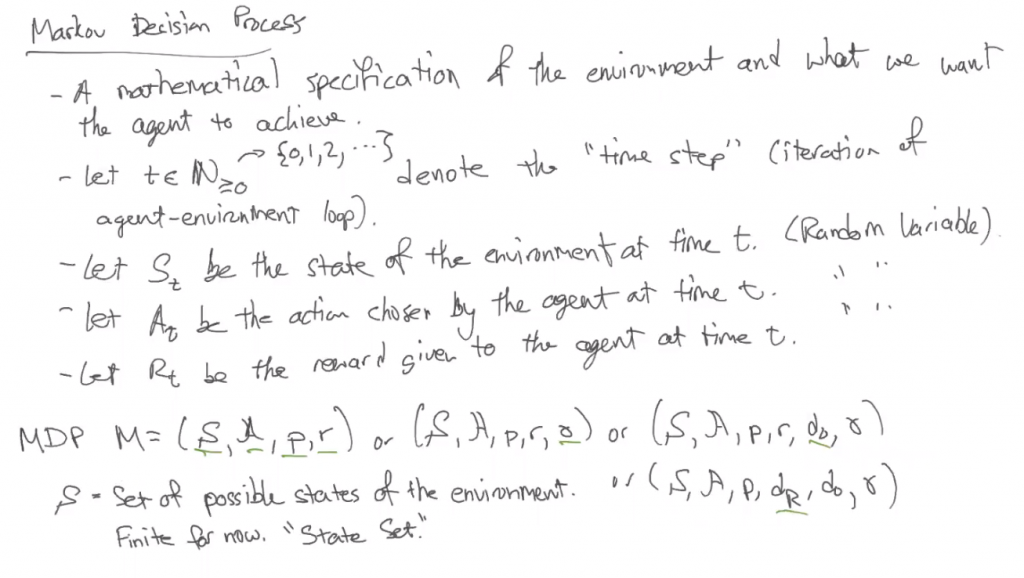

Policy: The mechanism within the agent that determines which action to take. (Also possibilities depends on the state)

Learning: Corresponds to the agent changing its policy.

$\pi : \mathcal{S} \times \mathcal{A} \rightarrow [0,1]$

$\pi(s,a) \triangleq Pr(A_t=a|S_t=s), \forall s,a,t$

Deterministic policy: $\pi(s,a) \in {0,1}, \forall s,a$

abusing way for notation — $\Pi (s) \in \mathcal{A}, A_t = \pi (S_t)$

In general, you can model everything by MDPs although it may not be the best way to formulate the environment and the agent. The only case that really breaks the MDP setting is non-stationary. So, when something in the env, like, the transition function is changing over time. (Next lecture — Markov property in stationarity)

Example: Policy for 687GridWorld

- Any $|\mathcal{S}| \times |\mathcal{A}|$ matrix where:

- Rows sum to one

- Entries all $\geq 0$